Checking Accuracy and Bias

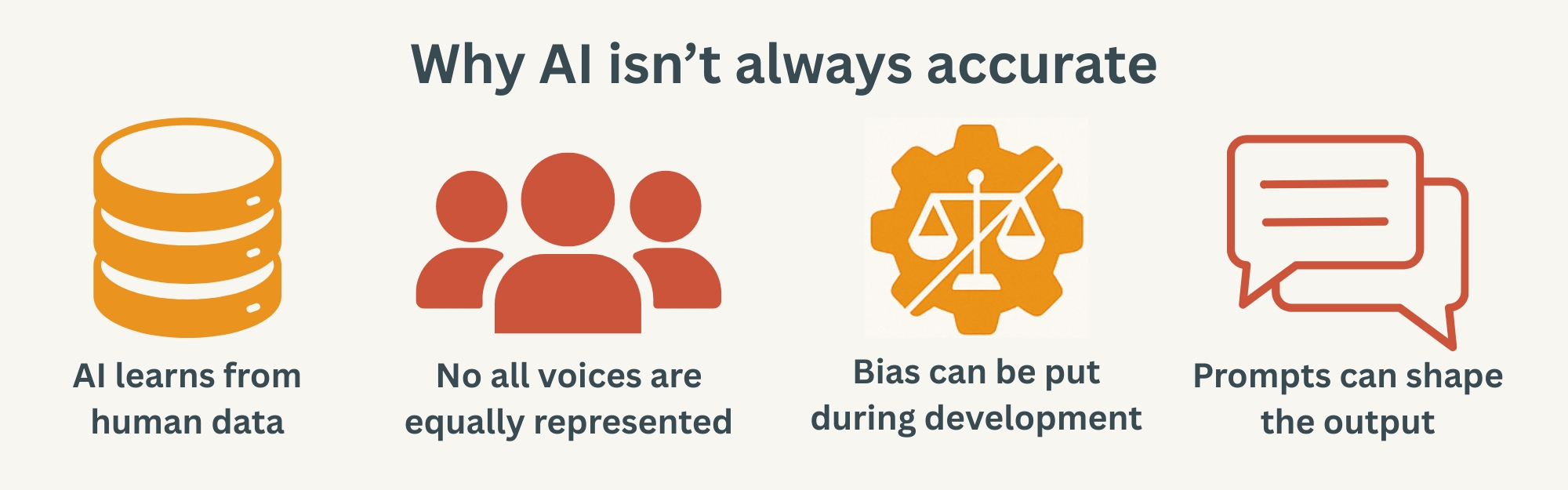

Why isn't AI always accurate?

Even though AI can sound confident, its answers aren’t always right. Here are four main reasons why:

- AI learns from human data

  AI is trained on information created by people, which can include errors, outdated facts, or opinions presented as truth.

- Not all voices are equally represented

  Some groups, perspectives, and experiences appear less often in the data AI learns from. This means AI may overlook or misrepresent certain viewpoints.

- Bias can be introduced during development

  Choices made by developers, such as what data to include or how to filter responses, can unintentionally add bias into the system.

- Prompts can shape the output

  The way you ask a question heavily influences the answer. Vague or leading prompts can produce incomplete, misleading, or biased results.

Learn how to write more accurate and inclusive prompts in the section below.

Checking Accuracy and Bias

You are responsible for the AI content that you produce. This means you MUST proofread AI responses, verify their correctness, and check for bias. Apply these checks to help ensure your AI-generated content is accurate and appropriate:

Fact-check key details

Use trusted sources (e.g., government sites, college policies, academic databases) to confirm names, dates, statistics, and claims. Don’t assume AI is correct.

Faulty Prompt: “When did the UK leave the EU? Give me a date.” (Too open; AI may give a plausible but wrong date.)

Example: AI says “The UK formally left the EU on January 1st, 2021, following a referendum in 2017.”

What’s Wrong: Sounds credible, but the referendum was held in 2016 and the UK formally left the EU on 31 January 2020. A transition period then ran until 31 December 2020.

Ensure balance and representation

If an answer feels biased or incomplete, reword your prompt. Phrases like “Give a balanced overview” or “Include multiple perspectives” can help.

Faulty Prompt: “Why should everyone choose online learning?” (Encourages only one viewpoint.)

Example: AI says “Online learning is the best because it’s flexible and convenient.”

What’s Wrong: Only presents the positives. A balanced response would also cover potential drawbacks, such as reduced face-to-face interaction and tech access issues, as well as the benefits of in-person learning, so readers can see multiple sides of the issue.

Ask follow-up questions

Request clarification or extra detail. Questions like “Can you expand on that?” or “What’s another perspective?” encourage more complete answers.

Faulty Prompt: “What caused the French Revolution?” (With no follow-up, the AI may stop at a short or surface-level answer.)

Example: AI says “The main cause of the French Revolution was inequality between social classes.”

What’s Wrong: Oversimplifies – omits other causes like economic crisis, political conflict, and Enlightenment ideas.

Write neutral, inclusive prompts

Avoid leading or vague questions. Instead of “Why is X the best?”, ask “What are the advantages and disadvantages of X?”

Faulty Prompt: “Why is renewable energy better than fossil fuels?” (Pushes AI toward a biased answer.)

Example: AI says “Renewable energy is always better and has no real disadvantages.”

What’s Wrong: Skips challenges like cost, storage, and reliability.

Check tone and suitability

Review the language for audience, formality, and suitability. AI can sound too casual, overconfident, or not fit for purpose.

Faulty Prompt: “Make my climate change report sound cool.” (Encourages an informal tone.)

Example: AI writes “Your report is awesome and will blow your tutor’s mind!”

What’s Wrong: Too casual for academic work.

Compare different tools

Not all AI works the same. If something seems off, try the same prompt in another tool to spot inconsistencies.

Faulty Prompt: “What’s the longest river in the world?” (One tool may give a different answer than another.)

Example: Tool A says “The Nile”, Tool B says “The Amazon”.

What’s Wrong: Without checking a trusted source, you can’t be sure which is correct.

Be aware of the difference between fair use of AI and use that might be considered an unfair advantage. As long as you follow the Assessment and Plagiarism Policies on the Documents and Policies website, you are encouraged to use generative AI to support your learning.

Learn more about how to properly reference AI tools in your academic writing on the “Referencing of AI tools” page.

Frequently Asked Questions (FAQs)

I want to learn more about Generative AI.

If you are interested in how generative AI works in more detail, Google have produced an excellent explanation video.

You can also find out more on Google’s blog feature, Ask a Techspert.

Finally, you could ask AI to explain itself by signing in to Copilot with your college account.

Can I use AI to help with my assignments?

Using AI as a starting point is good practice. Asking AI about the subject of your assignment can give you additional insight and help with where to begin, which is useful because starting an assignment is often the hardest part.

You just need to make sure you do not copy and paste the information the AI gives you into your assignment. If you want to use some of the AI output in your work, make sure your teacher allows it and that it is referenced properly.

Will I get in trouble or face disciplinary action for using AI?

If you use AI correctly, in line with the college’s guidance and plagiarism policy, you will not need to worry about getting into trouble.

However, if you choose to ignore this guidance and use AI in your work by copying and pasting full assignments or parts of them, you may face disciplinary action.